Introduction

Traditional streaming protocols such as RTSP and RTMP support low-latency streaming. However, they are quite complex to implement, and I did not find any efficient library that works properly on a microcontroller such as the ESP32. I tried to port the live555 project, but so far I have not managed to get it working properly.

We could use Bluetooth with my A2DP library, but the lag (of more than half a second) is too big to be useful if we want to play an instrument live. If you do not care about latency, it is still a good option.

Finally, you can also just implement a web server which simply returns audio data, as demonstrated in these examples.

So I thought it best to take a step back and just use regular communication protocols to send audio as raw binary data over the wire.

Data Synchronization

In theory, if we send the data out at a defined rate and output it on the receiving system at the same rate, we should be fine. In practice, however, if the clocks of both systems are not synchronized, we will run into buffer overflows or underflows. It is thus far easier to send the data as fast as possible and just block on the receiving system when the buffer is full. This approach works well with all recorded data or data which is created via DSP algorithms. This is, by the way, also how A2DP works!
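The blocking idea can be illustrated with a small bounded buffer. This is only a minimal sketch in portable C++ and not code from any library: the class name `BlockingSampleBuffer` and its methods are hypothetical, and it assumes a sender thread and an output thread sharing the buffer.

```cpp
#include <condition_variable>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <mutex>

// Hypothetical bounded FIFO of audio samples (name and API are assumptions).
// write() blocks while the buffer is full, read() blocks while it is empty.
class BlockingSampleBuffer {
 public:
  explicit BlockingSampleBuffer(std::size_t capacity) : capacity_(capacity) {}

  // Called by the sending side: stalls until there is room for one more sample.
  void write(int16_t sample) {
    std::unique_lock<std::mutex> lock(mutex_);
    not_full_.wait(lock, [this] { return samples_.size() < capacity_; });
    samples_.push_back(sample);
    not_empty_.notify_one();
  }

  // Called by the output side at its own pace: stalls until a sample exists.
  int16_t read() {
    std::unique_lock<std::mutex> lock(mutex_);
    not_empty_.wait(lock, [this] { return !samples_.empty(); });
    const int16_t sample = samples_.front();
    samples_.pop_front();
    not_full_.notify_one();
    return sample;
  }

  // Number of samples currently waiting in the buffer.
  std::size_t size() {
    std::lock_guard<std::mutex> lock(mutex_);
    return samples_.size();
  }

 private:
  const std::size_t capacity_;
  std::deque<int16_t> samples_;
  std::mutex mutex_;
  std::condition_variable not_full_;
  std::condition_variable not_empty_;
};
```

The writer never needs to know the output rate: it simply pushes as fast as it can, and the full buffer slows it down to the speed of the reader.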

Latency can be controlled with the buffer size: a small buffer leads to low latency.
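To get a feeling for the numbers, the delay added by a full buffer can be estimated from its size and the audio format. The helper below is a hypothetical sketch (the name `bufferLatencyMs` is my own) and assumes uncompressed PCM data with `int16_t` samples by default.

```cpp
#include <cstddef>
#include <cstdint>

// Time in milliseconds that a completely filled buffer represents.
// Assumes uncompressed PCM; bytesPerSample defaults to int16_t samples.
double bufferLatencyMs(std::size_t bufferBytes, uint32_t sampleRate,
                       uint32_t channels,
                       std::size_t bytesPerSample = sizeof(int16_t)) {
  const double bytesPerSecond =
      static_cast<double>(sampleRate) * channels * bytesPerSample;
  return 1000.0 * static_cast<double>(bufferBytes) / bytesPerSecond;
}
```

For example, a 1024 byte buffer holding stereo data at 8000 samples per second represents 1024 / 32000 seconds, i.e. 32 ms of audio.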

Data Representation

On microcontrollers, audio data is usually just a stream of int16_t values. One problem is that different processors represent numbers in different ways: endianness is the order of the bytes of a word of digital data in computer memory, and it is primarily expressed as big-endian or little-endian. To simplify things, we assume that we just exchange data between systems with the same endianness!
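If that assumption ever does not hold, the byte order can be checked at run time and the samples swapped on one side. This is just a sketch in standard C++: the function names `isLittleEndian` and `swapBytes` are hypothetical and not part of any library mentioned here.

```cpp
#include <cstdint>
#include <cstring>

// Returns true if this machine stores the least significant byte first.
bool isLittleEndian() {
  const uint16_t probe = 1;
  uint8_t firstByte = 0;
  std::memcpy(&firstByte, &probe, 1);  // look at the byte at the lowest address
  return firstByte == 1;
}

// Swaps the two bytes of a 16-bit sample, converting between
// big-endian and little-endian representations.
int16_t swapBytes(int16_t sample) {
  const uint16_t u = static_cast<uint16_t>(sample);
  return static_cast<int16_t>(static_cast<uint16_t>((u << 8) | (u >> 8)));
}
```

A receiver that knows the sender uses the opposite byte order would call `swapBytes` on every sample before writing it to the output.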

Protocols

TCP/IP

This is by far the simplest solution. We can use TCP/IP to push audio data from the sender to a receiver. If we use blocking I2S writes, the protocol will make sure that the sending is stalled when the I2S buffer is full. So we do not need to provide any specific synchronization logic and we can control the latency with the I2S buffer size.

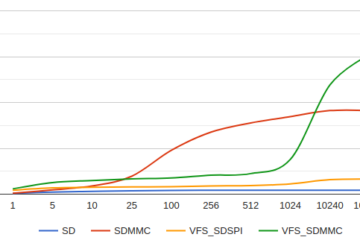

First I tested the sending speed to my MacBook with the help of netcat (“nc -l -p 8000 > /dev/null”): I was getting a throughput of 32000 to 37000 bytes per second! With int16_t data, 32000 bytes per second corresponds to a sample rate of 8000 samples per second in stereo or 16000 samples per second in mono.
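The conversion from throughput to sample rate is simple arithmetic: each frame needs one `int16_t` (2 bytes) per channel. A hypothetical helper (the name `maxSampleRate` is my own) makes this explicit, assuming uncompressed PCM data:

```cpp
#include <cstddef>
#include <cstdint>

// Highest sample rate (frames per second) that fits into a given byte rate.
// Assumes uncompressed PCM; bytesPerSample defaults to int16_t samples.
uint32_t maxSampleRate(uint32_t bytesPerSecond, uint32_t channels,
                       std::size_t bytesPerSample = sizeof(int16_t)) {
  return static_cast<uint32_t>(bytesPerSecond / (channels * bytesPerSample));
}
```

With the measured 32000 bytes per second, `maxSampleRate(32000, 2)` gives 8000 and `maxSampleRate(32000, 1)` gives 16000.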

To my disappointment, however, I was getting just 3000 bytes per second on the receiving side (with some bursts where it reached the normal speed of around 32000 bytes per second). So there is definitely something wrong on the receiving side!

Strangely, the related tests with netcat (e.g. nc -w 2 192.168.1.39 8000 < x.data) were giving really good rates of around 130’000 bytes per second!

So it seems that the issue is only in the transmission between 2 ESP32 devices!

After some research I found that the issue is related to the modem sleep setting: calling esp_wifi_set_ps(WIFI_PS_NONE) disables modem sleep entirely and with this I am getting a stable 32000 bytes per second!

With this low bandwidth, however, we will need to use a codec to get reasonable sampling rates: uncompressed stereo int16_t audio at 44100 samples per second would need 176400 bytes per second.

In the next blog post we will be looking at ESP-Now…

2 Comments

Clément · 3. November 2024 at 10:48

Hi !

I discovered your fantastic Audio Tools for my esp32 projects. Thanks a lot for the work !

On this subject of Audio over IP, I’m wondering if you ever tried the Audio Over Osc project (https://git.iem.at/aoo/aoo) ? Works like a charm on OS platforms, but not yet for esp32…

Best regards

pschatzmann · 3. November 2024 at 10:49

Yes, this is one of the many things that I still have on my todo list.