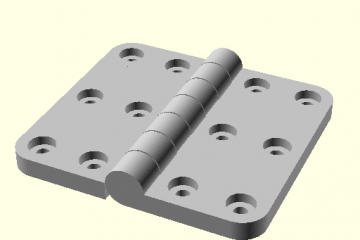

Hinges in Scad4J

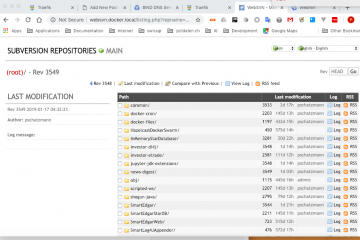

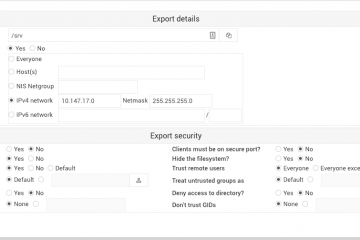

I am about to design a model RC plane. To attach the control surfaces to the body, I plan to use hinges. Fortunately, there is a ‘Parametric Hinge’ library for OpenSCAD, written by Rohin Gosling. In this post I will look at how to use it from Scad4J. Here is a quick Gist that demonstrates it.